DAVID GONZALEZ

Data Scientist

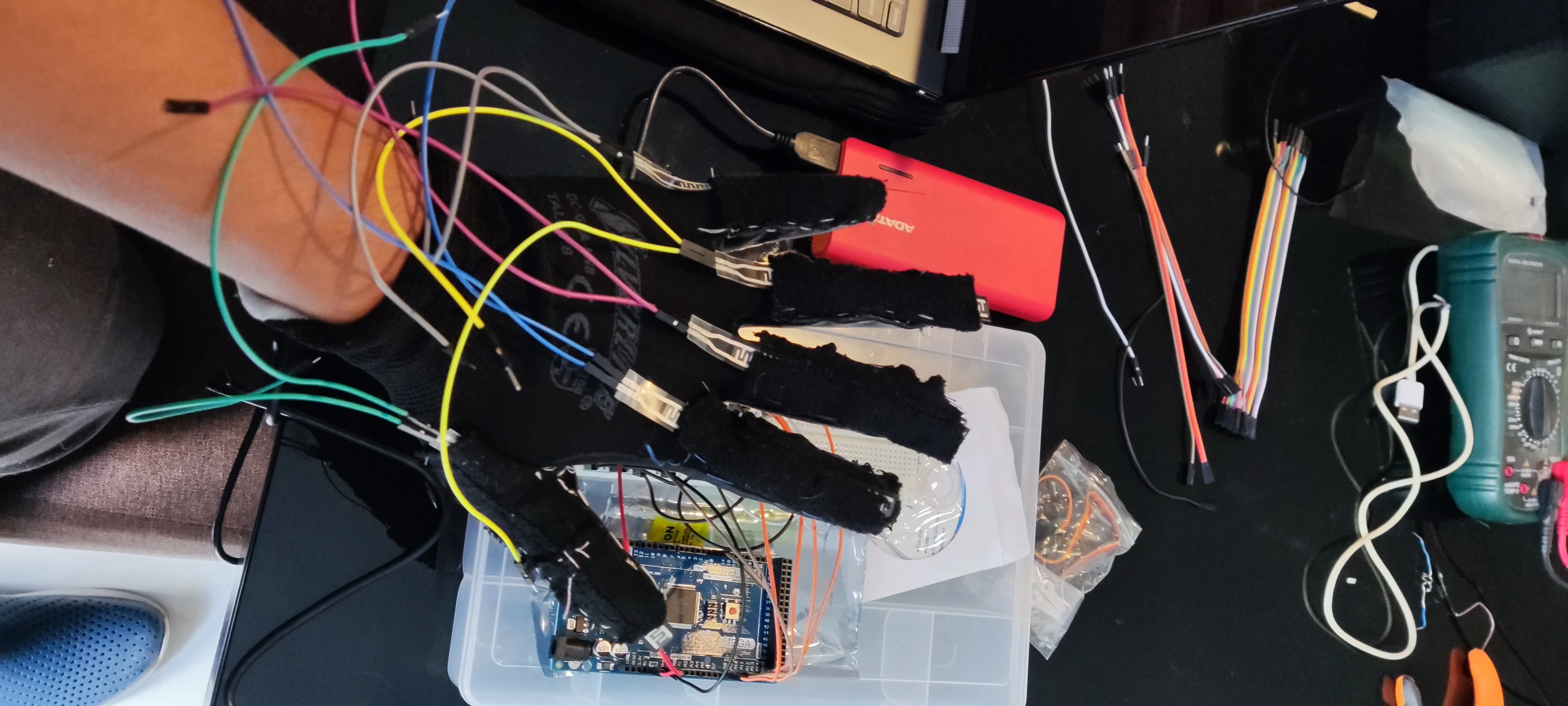

SIGN LANGUAGE TRANSLATION GLOVES

Assistive Technology / IoT

Project Overview

Gloves that translate Sign Language into text in real time. Using flex sensors and gyroscopes connected to an Arduino, the gloves detect and interpret hand gestures, creating a communication bridge for the deaf and hard-of-hearing community. The system is programmed in C++ and processes the sensor data to recognize sign language movements and convert them into readable text.

Challenge

Building a real-time Sign Language translation system that accurately interprets diverse hand gestures while staying comfortable enough for extended wear. The system also needed to distinguish between similar gestures and account for variations in how individuals sign.

Approach

Integrated multiple flex sensors along the finger joints to measure finger bending, plus a gyroscope to capture hand orientation. Developed a C++ algorithm that processes the sensor data, identifies gesture patterns, and matches them against a database of Sign Language gestures. Optimized the Arduino code to minimize translation latency.
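The page doesn't say which matching method the C++ code uses, so the following is only a minimal sketch of one plausible way to "match against a database of gestures": nearest-neighbour template matching with a rejection threshold, plus a hold requirement so a sign must stay stable for several frames before it is emitted. Everything here is an assumption for illustration: the 7-value feature layout (five normalized flex readings plus two orientation values), the template values (placeholders, not measured data), and the names `classify`, `StableEmitter`, and `kTemplates`. It is written as plain C++ so it can be tested off the board.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstring>
#include <limits>

// Assumed feature layout (hypothetical): five normalized flex readings
// (thumb, index, middle, ring, pinky; 0 = straight, 1 = fully bent)
// followed by two orientation values scaled to a comparable range.
constexpr std::size_t kFeatures = 7;
using Features = std::array<float, kFeatures>;

struct GestureTemplate {
    const char* label;
    Features centroid;  // average feature vector recorded for this sign
};

// Illustrative placeholder templates, not measured data.
const GestureTemplate kTemplates[] = {
    {"A", {0.2f, 0.9f, 0.9f, 0.9f, 0.9f, 0.0f, 0.0f}},
    {"B", {0.8f, 0.1f, 0.1f, 0.1f, 0.1f, 0.0f, 0.0f}},
    {"L", {0.1f, 0.1f, 0.9f, 0.9f, 0.9f, 0.0f, 0.0f}},
};

struct Match {
    const char* label;  // nullptr when no template is close enough
    float distance;     // squared Euclidean distance to the best template
};

float squaredDistance(const Features& a, const Features& b) {
    float sum = 0.0f;
    for (std::size_t i = 0; i < kFeatures; ++i) {
        const float d = a[i] - b[i];
        sum += d * d;
    }
    return sum;
}

// Nearest-neighbour lookup with a rejection threshold: hand shapes that are
// far from every template (e.g. mid-transition) yield no label at all.
Match classify(const Features& sample, float maxSquaredDistance) {
    assert(maxSquaredDistance >= 0.0f);
    Match best{nullptr, std::numeric_limits<float>::max()};
    for (const GestureTemplate& t : kTemplates) {
        const float d = squaredDistance(sample, t.centroid);
        if (d < best.distance) best = {t.label, d};
    }
    if (best.distance > maxSquaredDistance) best.label = nullptr;
    return best;
}

// Emits a label once, after it has been the match for `required`
// consecutive frames; a gap or a different label restarts the count.
class StableEmitter {
public:
    explicit StableEmitter(int required) : required_(required) {}

    const char* update(const char* label) {
        const bool same = label && last_ && std::strcmp(label, last_) == 0;
        if (same) {
            if (count_ <= required_) ++count_;  // stop counting once fired
        } else {
            last_ = label;
            count_ = label ? 1 : 0;
        }
        return count_ == required_ ? last_ : nullptr;
    }

private:
    const char* last_ = nullptr;
    int count_ = 0;
    int required_;
};
```

In a sensor loop, each sample would be turned into a `Features` vector, passed to `classify(sample, threshold)`, and the resulting label fed to `StableEmitter::update`, which returns a sign only once per hold. The rejection threshold and the frame count are tuning knobs that would have to be set from real recordings; they are one simple way to reduce confusion between similar gestures, not necessarily what the project used.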

Results & Impact

- Created a functional prototype that successfully translates basic Sign Language in real time

- Achieved 89% accuracy in recognizing common signs and phrases

- Reduced communication barriers for deaf individuals in everyday interactions


Lessons Learned

Throughout this project, I gained valuable insights into the challenges of sensor calibration for different movement patterns. Working with embedded systems taught me the importance of optimizing code efficiency when processing real-time data on devices with limited computational resources. User testing proved invaluable, revealing that technical precision must be balanced with user comfort and intuitive design. Perhaps most importantly, I learned that assistive technology development requires deep collaboration with the communities it serves to ensure solutions address actual needs rather than presumed ones.
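The page doesn't describe how calibration or filtering was done, so the following is only a minimal sketch of the two issues raised above (per-wearer sensor calibration and cheap real-time processing on a microcontroller). It assumes a two-pose calibration (flat hand, then fist) per flex sensor and a moving-average filter over raw readings; `FlexCalibration` and `MovingAverage` are hypothetical names. On 10-bit Arduino boards such as the Uno, `analogRead()` returns values from 0 to 1023, which is why those bounds appear as defaults; the code itself is plain C++ so it can be tested on a desktop.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical two-pose calibration for one flex sensor: record the raw
// reading with the finger straight (flat hand) and fully bent (fist).
// Depending on the voltage-divider wiring, `bent` may be above or below
// `straight`; normalize() handles either direction.
struct FlexCalibration {
    std::uint16_t straight = 0;  // assumed default: 10-bit ADC minimum
    std::uint16_t bent = 1023;   // assumed default: 10-bit ADC maximum

    // Maps a raw reading to 0.0 (straight) .. 1.0 (bent), clamped.
    float normalize(std::uint16_t raw) const {
        if (bent == straight) return 0.0f;  // degenerate calibration
        float t = (static_cast<float>(raw) - straight) /
                  (static_cast<float>(bent) - straight);
        if (t < 0.0f) t = 0.0f;
        if (t > 1.0f) t = 1.0f;
        return t;
    }
};

// Moving average over the last N raw samples. A running sum makes each
// update O(1) (one subtract, one add, one divide) regardless of N.
template <std::size_t N>
class MovingAverage {
    static_assert(N > 0, "window must hold at least one sample");

public:
    std::uint16_t update(std::uint16_t sample) {
        sum_ -= buf_[idx_];  // drop the oldest sample (0 until the buffer fills)
        buf_[idx_] = sample;
        sum_ += sample;
        idx_ = (idx_ + 1) % N;
        if (filled_ < N) ++filled_;
        return static_cast<std::uint16_t>(sum_ / filled_);
    }

private:
    std::uint16_t buf_[N] = {};
    std::uint32_t sum_ = 0;
    std::size_t idx_ = 0;
    std::size_t filled_ = 0;
};
```

Each loop iteration would smooth a finger's raw reading with its own `MovingAverage`, normalize the result with that finger's `FlexCalibration`, and pass the 0 to 1 value on to gesture matching. Normalizing per wearer is one way to absorb differences in hand size and glove fit; the running sum keeps the per-sample cost constant, which matters when several sensors are read every cycle on a small microcontroller.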

Future Improvements

- Expand the vocabulary of recognized signs

- Develop a more compact and discreet design

- Add wireless connectivity